Pricefx Classic UI is no longer supported. It has been replaced by Pricefx Unity UI.

Data Loads

A Data Load represents a task or process that manipulates data between Analytics objects, such as uploading data from a Data Feed to a Data Source, deleting rows from a Datamart, or calculating new field values.

Most Data Loads are created automatically (when you deploy a Data Source or Datamart) but you can also create them manually (e.g., a calculation Data Load to manipulate the data).

Data Loads provide the following actions:

| Type | Description | Available for |
|---|---|---|
| Truncate | Deletes (all/filtered) rows in the target. Note: When a Data Source is deployed, the Truncate Data Load of the linked Data Feed is updated with a filter to include only rows previously successfully flushed to Data Source and it is scheduled to run once a week. This applies only if there is no other filter or schedule already defined. Incremental mode is no longer available for Truncate jobs. For older jobs (created before upgrade to Collins 5.0 release) where this option was enabled, it will stay enabled. If you disable the Incremental option, the check-box will become non-editable and you will not be able to enable the option again. For Data Loads saved with the Incremental option off, the check-box is completely hidden. | |
| Flush | Copies data from the Data Feed into the Data Source or from Data Source to Datamart. It can also convert values from string to proper data types set in the Data Source. It can copy everything or just new data (i.e. incremental Data Load). | |
| Refresh | Copies data from Data Sources (configured in the Datamart fields) into the target Datamart. It can copy everything or just new data (i.e. incremental Data Load). | |
| Calculation | Applies a logic (defined in Configuration) to create new rows, or change/update values in existing rows in the target Data Source or Datamart. The calculation can take data from anywhere, e.g. Price Setting tables. Example usage: | |
| Calendar | Generates rows of the built-in Data Source "cal" and you get a Gregorian calendar. (If you need any other business calendar, just upload the data into the "cal" Data Source from a file or via integration and do not use this Data Load). | |
| Customer | Special out-of-the-box Data Load which copies data from the Price Setting table "Customer" into the Data Source "Customer". | |
| Product | Special out-of-the-box Data Load which copies data from the Price Setting table "Product" into the Data Source "Product". | |
| Simulation | Applies a logic to the data as defined by the simulation for which the Data Load was created. | |
| Internal Copy | Copies the data from a source into the Data Source table. The source here can be: As this action supports the Incremental option and the Source Filter option for Analytics Rollups, it allows you to create historical Data Sources based on Analytics Rollups. (Otherwise Data Sources based on rollups behave like a materialized view, cloning the exact data of the rollup). The incLoadDate is hidden in the UI and if it is passed in the API request, it is ignored. | |
| Index Maintenance | This task can be run to repair indexes associated with the target Data Source or Datamart, typically after backend DB migration. The task should be run only in these special circumstances, not on a regular or scheduled basis. We also strongly recommend consulting Pricefx support before you run this task. | |
| Calculation+ | Allows you to split a large set of data into batches and process them independently. See Distributed Calculation for details. | |

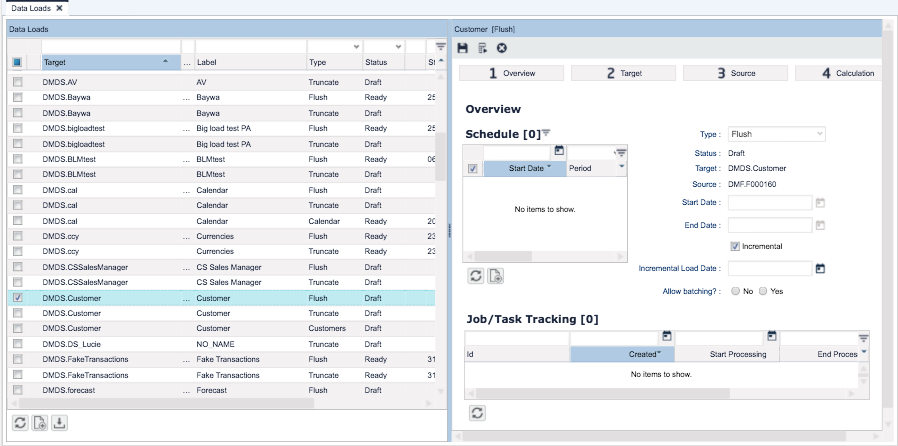

The Data Load page consists of:

- Data Loads list on the left.

Data Loads are organized in a grid, so that you can easily filter just the relevant Data Loads. In the first column 'Target' you will find what object the Data Load is for.

In this list, the Delete button is shown only if all selected Data Loads can be deleted, i.e. each of them is either invalid (e.g. its target object was deleted) or is not a default Data Load created by the system. (System-generated Data Loads can be deleted by a user only if they are invalid.)
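The Delete button rule can be read as a simple predicate over the selection. The following is a minimal sketch in plain Groovy, not platform code: the `DataLoadRow` class, its properties, and both function names are hypothetical and exist only to illustrate the rule.

```groovy
// Hypothetical model of one row in the Data Loads list; property names are assumptions.
class DataLoadRow {
    String label
    boolean valid          // false when, for example, the target object was deleted
    boolean systemDefault  // true for Data Loads created automatically by the system
}

// A Data Load can be deleted if it is invalid or if it is not a system default.
boolean canDelete(DataLoadRow row) {
    !row.valid || !row.systemDefault
}

// The button is shown only when every selected Data Load can be deleted.
boolean showDeleteButton(List<DataLoadRow> selected) {
    selected.every { canDelete(it) }
}

def systemLoad = new DataLoadRow(label: "DM Refresh", valid: true, systemDefault: true)
def manualLoad = new DataLoadRow(label: "Custom calculation", valid: true, systemDefault: false)
def orphanLoad = new DataLoadRow(label: "Orphaned flush", valid: false, systemDefault: true)

println showDeleteButton([manualLoad, orphanLoad])
println showDeleteButton([manualLoad, systemLoad])
```

In this sketch, a single valid system-generated Data Load in the selection is enough to hide the button.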

- Data Load job details on the right. At the top there are icons to run the Data Load manually or cancel the load. (Data Loads have a default timeout of 48 hours to accommodate even large and complex jobs. If needed, the jobs can be cancelled here.)

Large amounts of data (more than 5 million rows) can be processed in batches. By default, batch processing is enabled for Flush operations and disabled for Calculation. You can override this setting using the Allow batching? option. (The batch size is an instance parameter and the default value is 2 million rows. There must not be any dependencies between rows belonging to different batches.)
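To make the numbers above concrete, here is a rough sketch of how a row count might be divided into batches. It is an illustration only: the function name `planBatches` and the use of contiguous row ranges are assumptions, and the platform's actual partitioning strategy is not described here. The threshold (5 million) and default batch size (2 million) are taken from the text; on a real instance the batch size is an instance parameter.

```groovy
// Hypothetical helper: returns [startRow, endRowExclusive] pairs for each batch.
// allowBatching mirrors the "Allow batching?" option; threshold and batchSize
// default to the values quoted in the text above.
List<List<Long>> planBatches(long totalRows, boolean allowBatching,
                             long threshold = 5_000_000L, long batchSize = 2_000_000L) {
    if (totalRows <= 0) {
        return []
    }
    // Batching applies only when enabled and the row count exceeds the threshold.
    if (!allowBatching || totalRows <= threshold) {
        return [[0L, totalRows]]
    }
    def batches = []
    for (long start = 0L; start < totalRows; start += batchSize) {
        batches << [start, Math.min(start + batchSize, totalRows)]
    }
    return batches
}

// 5.5 million rows with batching enabled are split into three batches.
planBatches(5_500_000L, true).each { println "rows ${it[0]} to ${it[1]}" }
```

Because each batch is planned without reference to the others, the sketch only makes sense when rows in different batches do not depend on each other, which is the constraint stated above.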

Depending on the Data Load type, you will find here some of these sections:

- Overview – Summarizes the basic information on the Data Load. Here you can also schedule the Data Load manually (described below). The Job/Task Tracking section at the bottom shows the status for each task of the Data Load.

You can select a validation logic that will validate the target data after refresh. The following rules apply:

- The target Datamart name is available in the validation logic through the "dmName" binding variable:

    def dmName = api.getBinding("dmName")

- Use the name to query for the Datamart's data and apply custom validation rules.

- Raise a warning with a custom validation message when a validation rule is not satisfied:

    api.addWarning("Missing value in field1")

- When api.addWarning() is invoked, the data validation is considered as failed and the Data Load's status is set to Error. Note that the validation logic result does not affect the Data Load process itself as it is run after the Data Load is completed.

- Validation messages passed from validation logic are present among the Data Load's calculation messages and can be viewed in the UI.

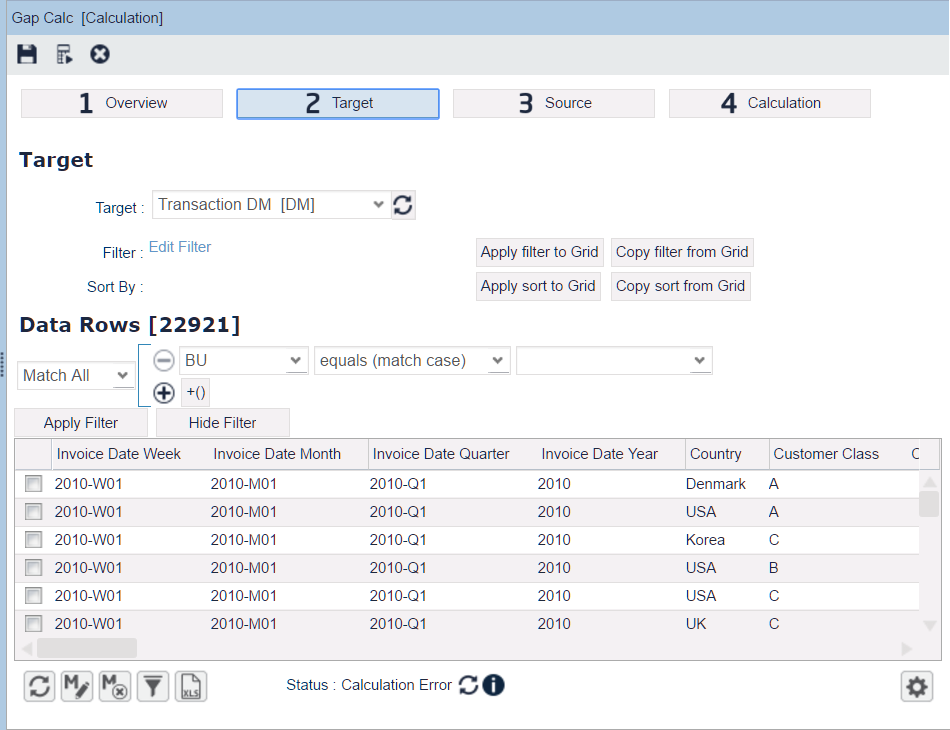
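The validation rules above can be put together roughly as follows. This is a minimal sketch that runs outside the platform: `ApiStub` is a stand-in for the real `api` object and mimics only `getBinding()` and `addWarning()`, the helper `findMissingValues`, the Datamart name "SalesDM", and the sample rows are all hypothetical, and the step that would query the Datamart is replaced by an in-memory list.

```groovy
// Stand-in for the Pricefx `api` object so the sketch runs outside the platform.
class ApiStub {
    Map<String, Object> bindings = [dmName: "SalesDM"]
    List<String> warnings = []

    Object getBinding(String name) { bindings[name] }
    void addWarning(String message) { warnings << message }
}

// Hypothetical rule: report every row whose required field is null or blank.
List<String> findMissingValues(List<Map<String, Object>> rows, String fieldName) {
    List<String> messages = []
    rows.eachWithIndex { row, index ->
        def value = row[fieldName]
        if (value == null || value.toString().trim().isEmpty()) {
            messages << "Missing value in ${fieldName} (row ${index + 1})".toString()
        }
    }
    return messages
}

def api = new ApiStub()
def dmName = api.getBinding("dmName")

// In a real validation logic these rows would come from querying the Datamart named dmName.
def rows = [
    [field1: "A-100", quantity: 10],
    [field1: null,    quantity: 5],
    [field1: "  ",    quantity: 7],
]

// Each failed rule becomes one warning; any warning marks the validation as failed.
findMissingValues(rows, "field1").each { api.addWarning(it) }
api.warnings.each { println it }
```

Keeping the rule in a separate function that returns messages, rather than calling `api.addWarning()` inside the loop, makes the rule easy to check on sample data before it is attached to a Data Load.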

- Target – Specifies the target of the data coming from the Data Load operation.

  - For a Flush operation, the target is one of the Data Sources.

  - For Refresh, the target is one of the Datamarts.

  - For Calculation, the target is either the Data Source or the Datamart that you want to update or enrich with columns.

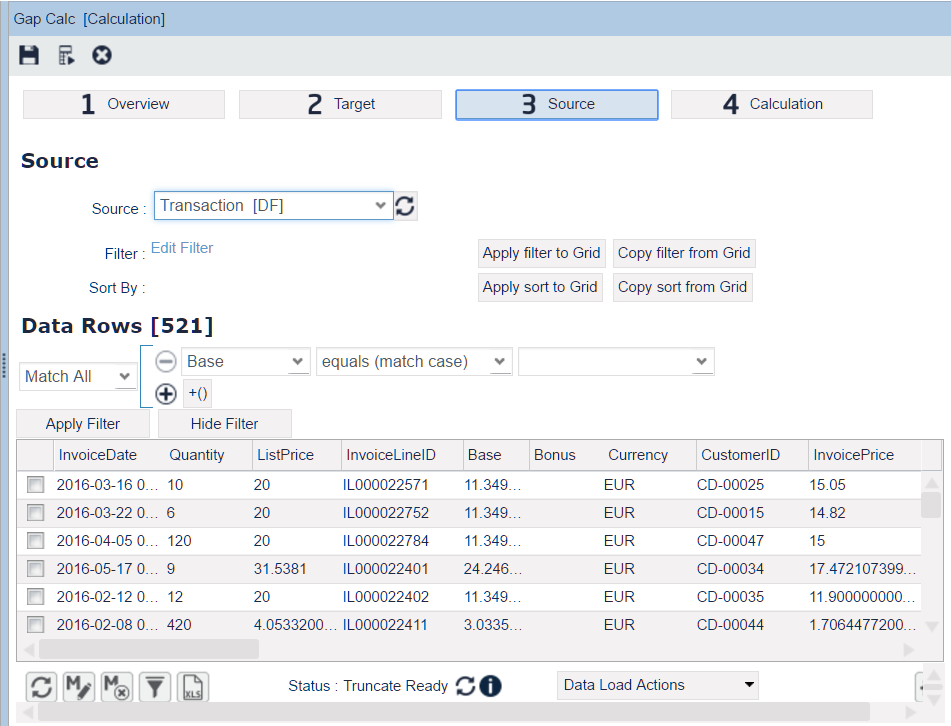

- Source – Specifies the source of data.

  - For a Flush operation, the source is a Data Feed.

  - For Refresh, the source is not specified because one target Datamart can have multiple sources. These source Data Sources are specified in the column definitions of the Datamart.

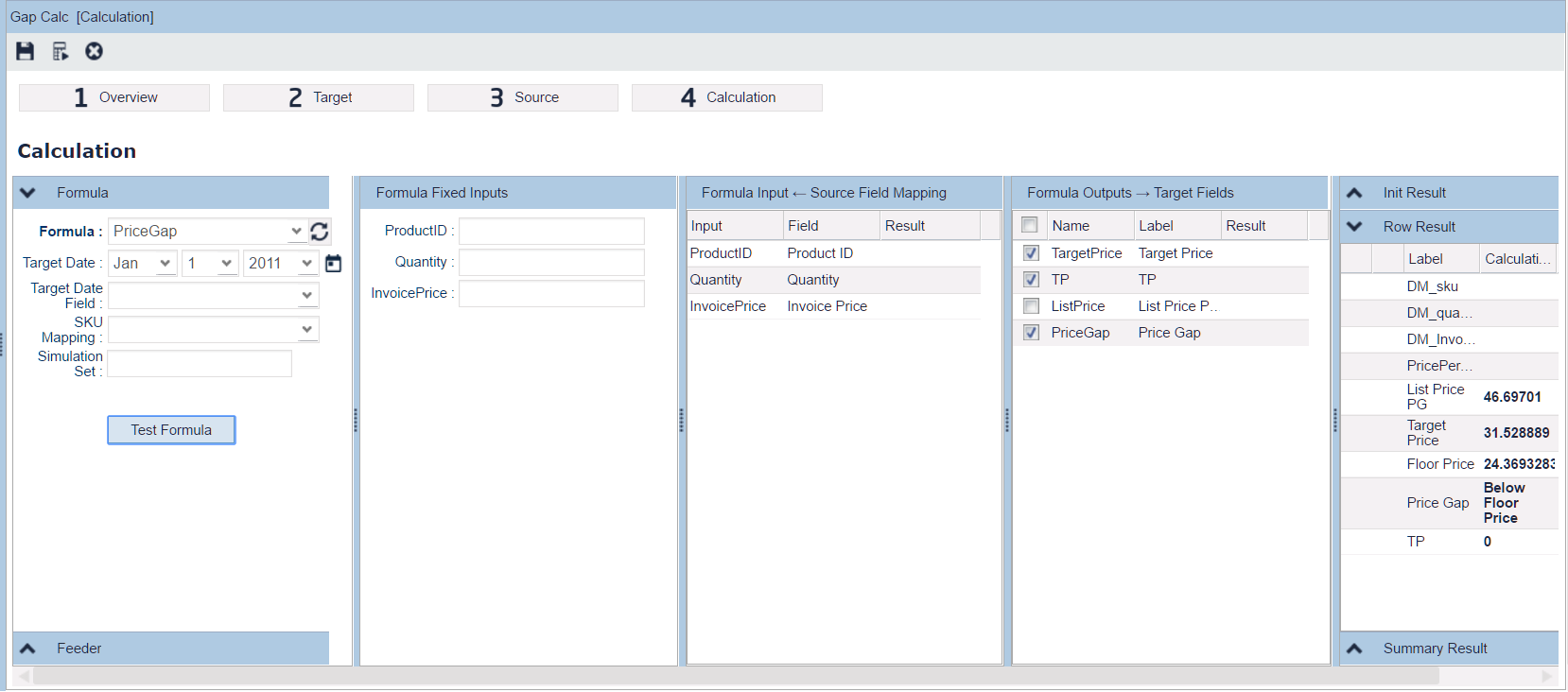

- Calculation – Specifies the logic to be executed. Such logic is set up in Configuration > Calculation Logic > Analytics. It can manipulate the data coming from the Source in many ways, e.g. filter the incoming rows from Source, create new lines for Target, modify/enrich/transform the data being copied from the Source into the Target.

If you leave the Target Date field empty, the calculation will use "today" as the target date.

Additionally, there can be a Feeder logic (defined as a Generic logic) which supplies the data objects to be processed (typical usage is Rebate Records Allocation to DM).

